Outreach

Recent highlights

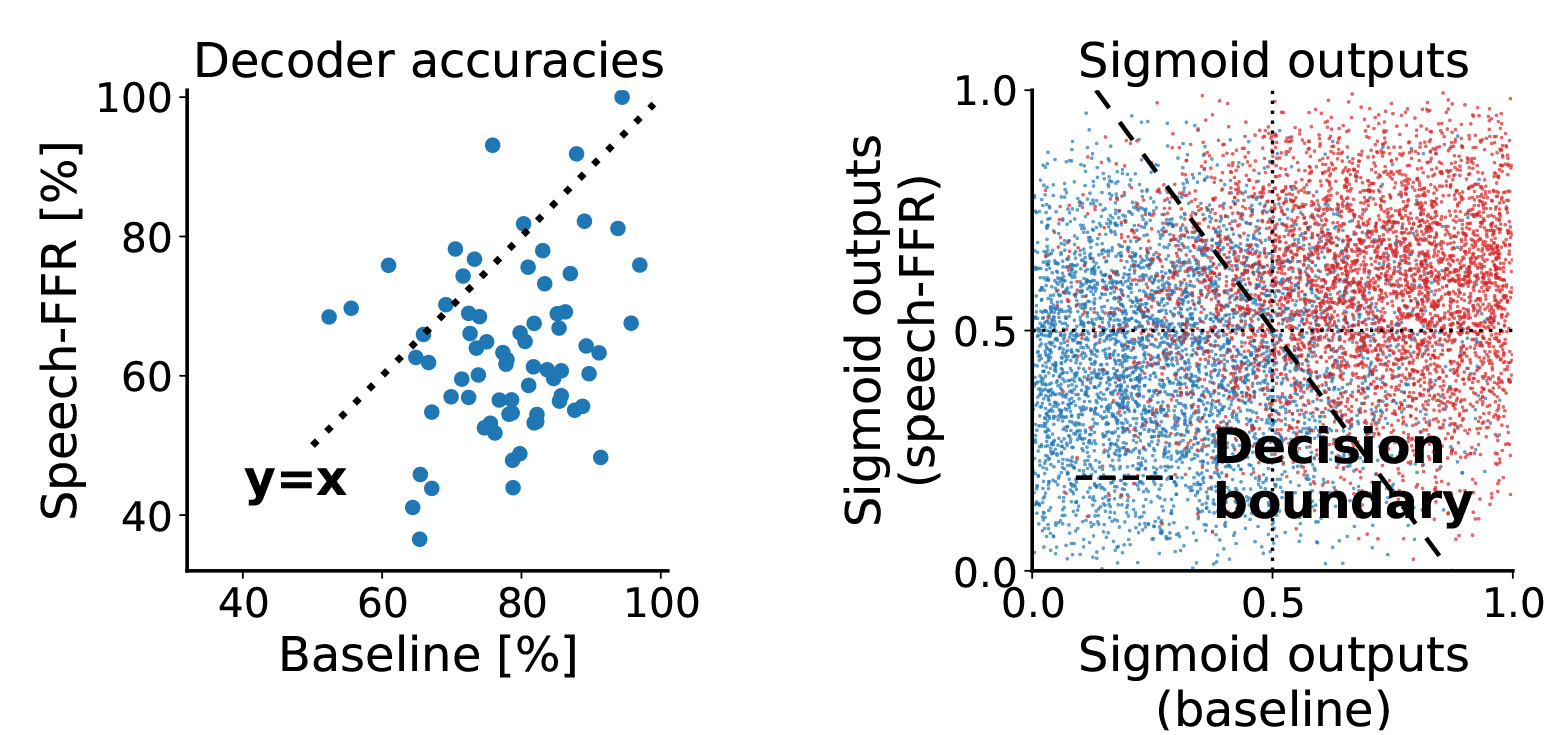

Better decoding of auditory attention through DNNs in patients with cochlear implants

Cochlear implants are amazing neurotechnological devices that can restore hearing in deaf or nearly deaf people. However, they convey only limited information about the acoustic scene. As a consequence, their users have major difficulties understanding speech in loud environments, for instance when several people talk simultaneously. If the target sound that a user wants to listen to were known, it could be selectively amplified by the cochlear implant to make it easier to understand. Here we show that the focus of attention can be decoded from brain signals recorded through electroencephalography (EEG), and that deep neural networks (DNNs) achieve a higher decoding accuracy than baseline linear models.

C. Jehn, A. Kossmann, N. K. Vavatzanidis, A. Hahne, T. Reichenbach,

CNNs improve decoding of selective attention to speech in cochlear implant users,

J. Neural Eng. 22:036034 (2025) [techRxiv][pdf]
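A common linear baseline for decoding auditory attention is a backward model: a regularized linear map from time-lagged EEG channels to the speech envelope, followed by a check of which speaker's envelope the reconstruction correlates with more strongly. The sketch below illustrates only that kind of baseline; it is not the CNN from the paper, and the function names, the ridge parameter and the lag range are illustrative assumptions.

```python
import numpy as np

def lagged(eeg, max_lag):
    """Stack copies of each EEG channel shifted by 0..max_lag samples.

    eeg has shape (n_samples, n_channels). Because the brain responds after
    the stimulus, the envelope at time t is reconstructed from EEG at
    t, t+1, ..., t+max_lag. Samples shifted past the end are zero-padded.
    """
    n, c = eeg.shape
    X = np.zeros((n, c * (max_lag + 1)))
    for k in range(max_lag + 1):
        X[: n - k, k * c:(k + 1) * c] = eeg[k:]
    return X

def fit_decoder(eeg, envelope, max_lag, ridge=1.0):
    """Ridge regression from lagged EEG to the attended speech envelope."""
    X = lagged(eeg, max_lag)
    gram = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ envelope)

def decode_attention(eeg, env_a, env_b, weights, max_lag):
    """Return the speaker ("A" or "B") whose envelope best matches the reconstruction."""
    recon = lagged(eeg, max_lag) @ weights
    r_a = np.corrcoef(recon, env_a)[0, 1]
    r_b = np.corrcoef(recon, env_b)[0, 1]
    return ("A" if r_a > r_b else "B"), r_a, r_b
```

The decoder is fitted on data where the attended speaker is known and then applied to new segments. Shorter segments yield noisier correlations and therefore lower decoding accuracy.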

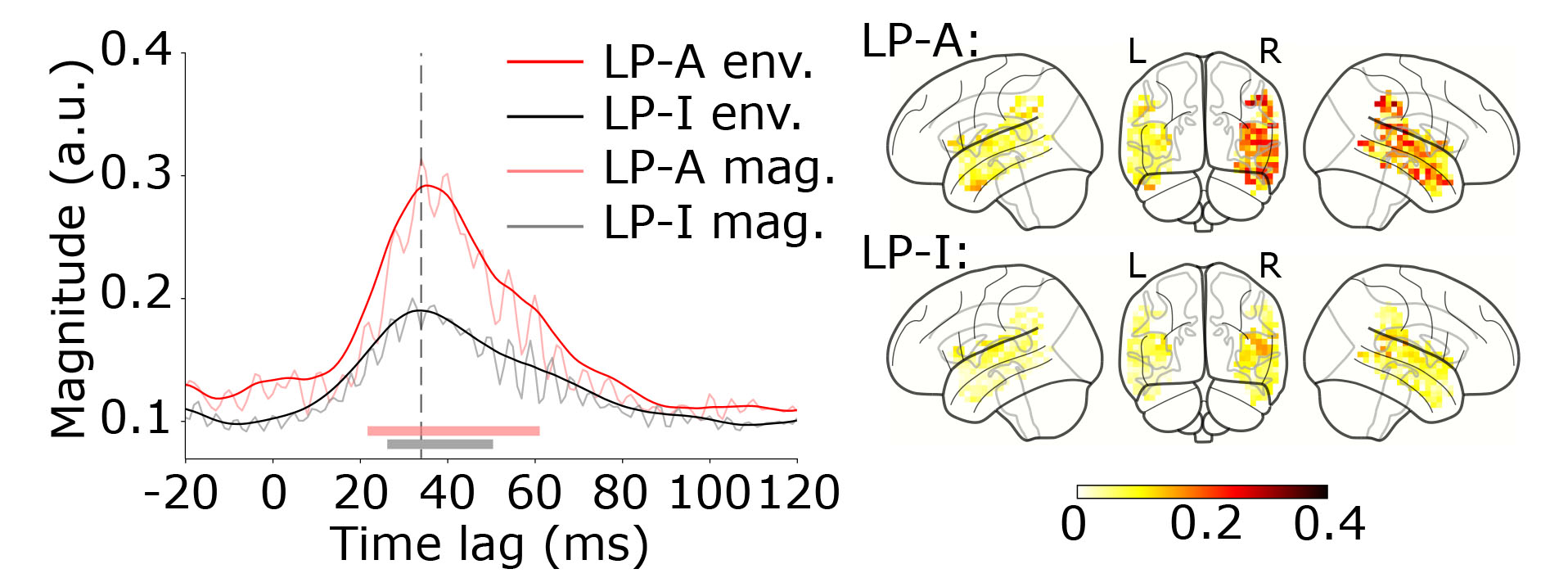

(No) influence of musical training on neural responses to speech

Musicians have been found to exhibit larger subcortical responses to the pitch of a speaker than non-musicians. These larger responses may reflect enhanced pitch processing due to musical training and may explain why musicians tend to understand speech in noisy environments better than people without musical training. However, there are also higher-level cortical responses to the pitch of a voice, and these are influenced by attention. We find no evidence that these cortical responses differ between musicians and non-musicians, nor that their attentional modulation depends on musical training. Our results suggest that, unlike the subcortical response, the cortical response to pitch is not shaped by musical training.

J. Riegel, A. Schüller, T. Reichenbach,

No evidence of musical training influencing the cortical contribution to the speech-FFR and its modulation through selective attention,

[bioRxiv][pdf]

The complete MEG data is available on zenodo.org.

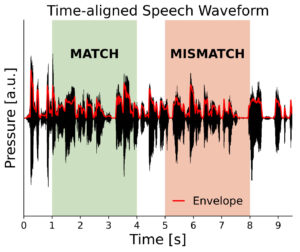

Match-mismatch decoding of speech-evoked EEG

Speech causes characteristic neural responses in the brain, both in the cerebral cortex and in subcortical areas. Determining the relation between speech and the resulting neural activity, measured noninvasively through EEG, can help to reveal how our brains process the complex information in speech. The recent ICASSP Auditory EEG Signal Processing Grand Challenge therefore set out to explore how best to distinguish segments of EEG data that match a particular speech stimulus from segments that do not. Our contribution, which won the challenge, combined slow responses to the speech envelope with faster responses at the fundamental frequency of the voice.

M. Thornton, D. Mandic, T. Reichenbach,

Decoding envelope and frequency-following EEG responses to continuous speech using deep neural networks,

IEEE O. J. Sign. Proc. 5, 700 (2024) [bioRxiv][pdf]
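In a match-mismatch task, the decoder sees an EEG segment and two candidate speech segments and must pick the one that evoked the EEG. The winning entry used deep neural networks; the sketch below only illustrates a final decision rule under simple assumptions: envelope and fundamental-frequency features have already been reconstructed from the EEG by some decoder, and their correlations with each candidate are combined by a weighted sum. The function names and the equal default weighting are hypothetical.

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation between two 1-D arrays."""
    x = x - x.mean()
    y = y - y.mean()
    return float(x @ y / np.sqrt((x @ x) * (y @ y)))

def match_mismatch(recon_env, recon_f0, cand1, cand2, weight=0.5):
    """Pick the candidate (0 or 1) that best matches the EEG-derived features.

    recon_env, recon_f0: envelope and fundamental-frequency features
        reconstructed from the EEG segment (assumed to be given).
    cand1, cand2: dicts with the same two features ("env", "f0") computed
        from each candidate speech segment.
    weight: relative weight of the slow envelope evidence (hypothetical).
    """
    def score(cand):
        return (weight * pearson(recon_env, cand["env"])
                + (1 - weight) * pearson(recon_f0, cand["f0"]))

    s1, s2 = score(cand1), score(cand2)
    return (0 if s1 >= s2 else 1), s1, s2
```

Summing the evidence from two feature streams can help when each stream alone yields only a weak correlation with the matching stimulus.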

Attentional modulation of the cortical contribution to the speech-FFR

When we listen to two competing speakers, we can selectively attend to one of them and understand that talker while ignoring the other. Here we show that this selective attention to speech involves cortical responses at the fundamental frequency of a speech signal. We measure speech-evoked activity in the auditory cortex through magnetoencephalography (MEG) and relate it to the fundamental frequency of a particular speaker. We then show that these neural responses are significantly larger when the speaker is attended than when they are ignored. Because the neural response occurs at a comparatively short delay of about 35 ms, its attentional modulation likely results from top-down feedback from higher processing centers.

A. Schüller, A. Schilling, P. Krauss, S. Rampp, T. Reichenbach,

Attentional modulation of the cortical contribution to the frequency-following response evoked by continuous speech,

J. Neurosci. (2023) 43:7429 [bioRxiv][pdf]
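The delay of about 35 ms refers to the lag at which the neural response best matches the speaker's fundamental waveform. A generic way to estimate such a lag is to cross-correlate the stimulus feature with the response and locate the peak. The sketch below assumes that both signals are already sampled at the same rate `fs`; it is not the analysis pipeline of the paper, and the function name and search range are illustrative.

```python
import numpy as np

def response_latency(stimulus_feature, response, fs, max_lag_s=0.1):
    """Estimate the delay (in seconds) of `response` relative to `stimulus_feature`.

    Only non-negative lags are searched, since the neural response is assumed
    to follow the stimulus. Both inputs are 1-D arrays of equal length.
    """
    x = (stimulus_feature - stimulus_feature.mean()) / stimulus_feature.std()
    y = (response - response.mean()) / response.std()
    n = len(x)
    lags = np.arange(int(round(max_lag_s * fs)) + 1)
    # r[k] approximates the correlation between the stimulus at t and the response at t + k
    r = np.array([np.mean(x[: n - k] * y[k:]) for k in lags])
    # Use the absolute value because the response polarity may be inverted
    best = int(np.argmax(np.abs(r)))
    return lags[best] / fs, r
```

Because the fundamental waveform of a voice is nearly periodic, its cross-correlation also shows side peaks spaced roughly one pitch period apart; the variation of pitch in natural speech is what makes the main peak stand out.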

EEG-Speech decoding through combining envelope tracking with the speech FFR

We can learn about the neurobiology of speech processing by recording the brain activity of a person listening to speech with electroencephalography (EEG). The EEG responses contain features of the speech signal, for instance its envelope as well as a frequency-following response to the temporal fine structure (speech-FFR), albeit at a low signal-to-noise ratio.

The recent ICASSP 2023 ‘Auditory EEG Decoding’ Signal Processing Grand Challenge addressed the problem of correctly relating an EEG signal to a speech stimulus despite the noise in the EEG signal. Our contribution won this challenge through an approach that combines the response to the speech envelope with the speech-FFR.

M. Thornton, D. Mandic, T. Reichenbach,

Relating EEG recordings to speech using envelope tracking and the speech-FFR,

ICASSP 2023 – 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (2023), 1-2. [bioRxiv] [pdf]
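The speech envelope referred to above is commonly obtained as the magnitude of the analytic signal (via the Hilbert transform) of the audio, followed by low-pass filtering. The sketch below uses only NumPy: the analytic signal is built in the frequency domain, and the low-pass filter is an ideal (brick-wall) FFT filter chosen for brevity. The 20 Hz cutoff and the function names are assumptions, not the settings of the challenge entry.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal of a real 1-D array, built via the FFT.

    Negative frequencies are zeroed and positive ones doubled; DC (and the
    Nyquist bin for even lengths) are kept once.
    """
    n = len(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1
        h[1:n // 2] = 2
    else:
        h[0] = 1
        h[1:(n + 1) // 2] = 2
    return np.fft.ifft(np.fft.fft(x) * h)

def lowpass_fft(x, fs, cutoff):
    """Ideal low-pass: zero all FFT bins above `cutoff` Hz."""
    X = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1 / fs)
    X[freqs > cutoff] = 0
    return np.fft.irfft(X, n=len(x))

def speech_envelope(audio, fs, cutoff=20.0):
    """Hilbert envelope of `audio`, smoothed below `cutoff` Hz (assumed value)."""
    return lowpass_fft(np.abs(analytic_signal(audio)), fs, cutoff)
```

For an amplitude-modulated tone, the function recovers the modulation: a 1 kHz carrier modulated at 4 Hz yields an envelope oscillating at 4 Hz, within the theta range associated with the syllabic rhythm.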

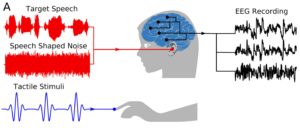

Speech enhancement through vibrotactile stimulation

Syllables are important building blocks of speech. They occur at a rate between 4 and 8 Hz, corresponding to the theta frequency range of neural activity in the cerebral cortex. When we listen to speech, the theta activity becomes aligned to the syllabic rhythm, presumably aiding in parsing a speech signal into distinct syllables. However, this neural activity can be influenced not only by sound but also by somatosensory information. Here, we show that the presentation of vibrotactile signals at the syllabic rate can enhance the comprehension of speech in background noise. We further provide evidence that this multisensory enhancement of speech comprehension reflects the multisensory integration of auditory and tactile information in the auditory cortex.

P. Guilleminot, T. Reichenbach,

Enhancement of speech-in-noise comprehension through vibrotactile stimulation at the syllabic rate,

Proc. Natl. Acad. Sci. U.S.A. (2022) 119:e2117000119. [pdf]

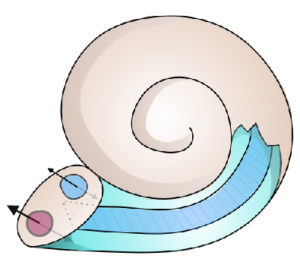

Drug transport in the cochlea through steady streaming

The mechanosensitive hair cells in the inner ear, or cochlea, are vulnerable to overstimulation, which can damage the cells and cause sensorineural hearing loss. Pharmacological treatments to prevent hair cell damage or to restore the cells’ function after impairment are currently being developed. However, delivering the drugs to the hair cells has remained a major problem: the cochlea is encased in the hard temporal bone, and the function of the hair cells relies on this encasing remaining intact. Here we show that steady streaming, a physical phenomenon that accompanies propagating waves, can be employed to transport drugs from an entry point near the middle ear to the hair cells inside the cochlea.

L. Sumner, J. Mestel, T. Reichenbach,

Steady Streaming as a method for drug delivery to the inner ear,

Scientific Reports (2021) 11:57. [pdf]

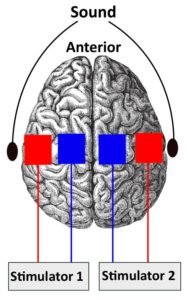

Enhancement of speech-in-noise comprehension through neurostimulation

Transcranial current stimulation can influence neuronal activity. As a striking example, current stimulation paired with a speech signal, with the current following the speech envelope, can influence the comprehension of speech in background noise. The speech envelope is a slowly varying signal whose frequency content lies mostly in the delta and theta frequency bands. Here we show that the modulation of speech comprehension results from the theta band, but not from the delta band. Moreover, we find that theta-band stimulation without an additional phase shift improves speech comprehension compared to sham stimulation.

M. Keshavarzi, M. Kegler, S. Kadir, T. Reichenbach,

Transcranial alternating current stimulation in the theta band but not in the delta band modulates the comprehension of naturalistic speech in noise,

Neuroimage (2020) 210:116557. [pdf]
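To make the delta/theta distinction concrete, the sketch below splits an envelope into a delta-band and a theta-band component, as one might do to derive band-specific stimulation waveforms. The theta edges (4 to 8 Hz) follow the syllabic range given above; the delta edges (1 to 4 Hz) and the ideal FFT filter are assumptions chosen for brevity, and actual stimulation studies typically use smoother filters.

```python
import numpy as np

def bandpass_fft(x, fs, lo, hi):
    """Ideal zero-phase band-pass: keep FFT bins with lo <= f < hi (Hz)."""
    X = np.fft.rfft(x)
    f = np.fft.rfftfreq(len(x), d=1 / fs)
    X[(f < lo) | (f >= hi)] = 0
    return np.fft.irfft(X, n=len(x))

DELTA = (1.0, 4.0)  # Hz, assumed band edges
THETA = (4.0, 8.0)  # Hz, syllabic/theta range

def split_envelope(envelope, fs):
    """Return the delta- and theta-band parts of an envelope (DC is removed)."""
    return {
        "delta": bandpass_fft(envelope, fs, *DELTA),
        "theta": bandpass_fft(envelope, fs, *THETA),
    }
```

On real, non-periodic recordings a brick-wall filter causes ringing, which is why practical pipelines usually prefer filters with gentler transitions.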

Measuring speech comprehension from EEG recordings

If hearing aids could measure how well a wearer understands speech, they might be able to optimize and adapt their signal processing to enable the best user experience. Hearing aids can potentially measure brain responses to speech from electrodes, but what such responses reveal about speech comprehension has remained unclear. Here we report significant progress on this issue. By combining machine learning with an experimental paradigm that allows us to disentangle lower-level acoustic brain responses from neural correlates of higher-level speech comprehension, we show that speech comprehension can be decoded from scalp recordings.

O. Etard and T. Reichenbach,

Neural speech tracking in the theta and in the delta frequency band differentially encode clarity and comprehension of speech in noise,

J. Neurosci. 39:5750 (2019). [pdf]

Decoding attention to speech from the brainstem response to speech

We are often faced with high noise levels: in a busy pub or restaurant, for instance, many conversations occur simultaneously. People with hearing impairment typically find it difficult to follow a particular conversation, even when they use hearing aids. Current hearing aids amplify all surrounding sounds, not only the target. If a hearing aid knew which speaker a user aims to listen to, it could amplify that voice in particular and reduce the background noise. Here we show that a hearing aid can potentially gain knowledge of a user’s attentional focus by measuring the auditory brainstem response from surface electrodes. In particular, we show that short recordings of down to a few seconds, obtained from a few scalp electrodes, suffice for a meaningful decoding of auditory attention.

O. Etard, M. Kegler, C. Braiman, A. E. Forte, T. Reichenbach,

Real-time decoding of selective attention from the human auditory brainstem response to continuous speech,

Neuroimage 200:1 (2019). [pdf] [bioRxiv]

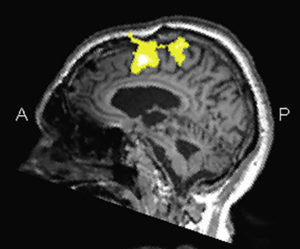

Neural responses to speech can help to diagnose brain injury

Brain injury, such as that following traffic or sports accidents, can lead to severe disorders, including disorders of consciousness. These disorders are currently diagnosed through behavioural assessments, but this method fails when patients are not able to respond overtly. We investigated whether neural responses to speech, as measured with the clinically applicable EEG, can aid in diagnosing disorders of consciousness. We focussed on the neural tracking of the speech envelope, which can index attention to speech as well as speech comprehension. We find that the latency of the neural envelope tracking is related to the severity of the disorder of consciousness: patients in a vegetative state without signs of consciousness showed neural responses to the speech envelope that were significantly delayed compared to patients who exhibited signs of consciousness.

C. Braiman, E. A. Fridman, M. M. Conte, C. S. Reichenbach, T. Reichenbach, N. D. Schiff,

Cortical Response to the Natural Speech Envelope Correlates with Neuroimaging Evidence of Cognition in Severe Brain Injury,

Curr. Biol. 28:1-7 (2018). [pdf]

How we can tune in to a voice in background noise

To follow a particular conversation, listeners need to be able to focus on the voice of the speaker they wish to listen to. This process is called selective attention and has been studied extensively in the auditory cortex. However, because of neural feedback from the cortex to lower auditory areas, namely the auditory brainstem and the inner ear, these structures may already participate actively in attending to a particular voice.

We have devised a mathematical method to measure the response of the auditory brainstem to the pitch of natural speech. In a controlled experiment on selective attention, we then showed that the brainstem responds more strongly to the pitch of the voice that a person is listening to than to that of the ignored voice. Our findings demonstrate that the brainstem already contributes actively to selective attention. They also show that the pitch of a voice can be a powerful cue to focus on that voice, which may inspire future speech-recognition technology.

A. E. Forte, O. Etard and T. Reichenbach,

The human auditory brainstem response to running speech reveals a subcortical mechanism for selective attention,

eLife 6:e27203 (2017). [pdf] [bioRxiv]